World Labs, the artificial intelligence (AI) startup, unveiled its first AI system on Monday. The currently unnamed system can generate interactive 3D worlds from an image input, turning the 2D visual asset into an explorable 3D scene that users can navigate using a keyboard and mouse. The AI system is currently in […]

OpenAI Sued by Canadian News Companies Over Alleged Copyright Breaches

Five Canadian news media companies filed a legal action on Friday against ChatGPT owner OpenAI, accusing the artificial-intelligence company of regularly breaching copyright and online terms of use. The case is part of a wave of lawsuits against OpenAI and other tech companies by authors, visual artists, music publishers and other copyright owners over data […]

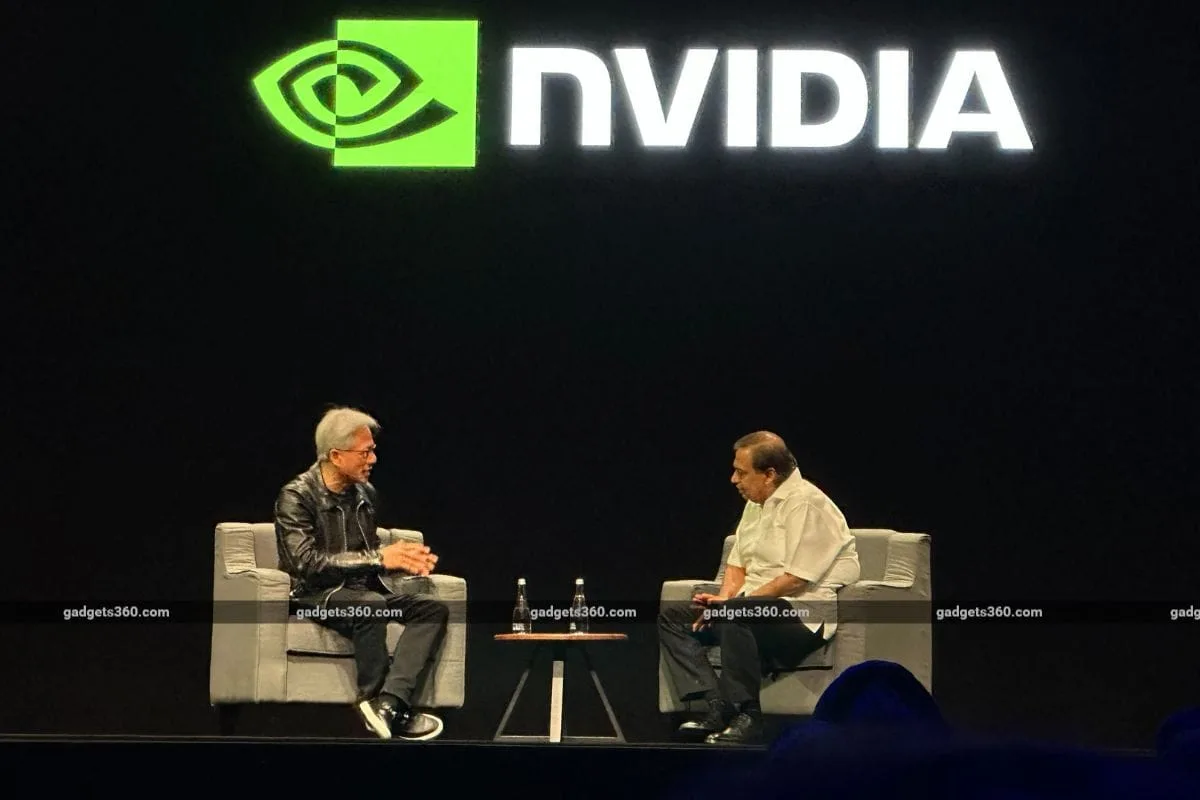

Nvidia CEO Jensen Huang Says ‘The Age of AI Has Started’

Nvidia CEO Jensen Huang said on Saturday that global collaboration and cooperation in technology will continue, even if the incoming U.S. administration imposes stricter export controls on advanced computing products. President-elect Donald Trump, in his first term in office, imposed a series of restrictions on the sale of U.S. technology to China citing national security […]

Bluesky Confirms It Will Not Train Its Generative AI Models on User Posts

Bluesky recently announced that it does not train its generative artificial intelligence (AI) models on user data. The social media platform also highlighted the areas where it uses AI tools and claimed that none of the models have been trained on the public and private posts made by users. The statement was released after several […]

TSMC to Suspend Production of Advanced AI Chips for China From November 11: Report

Taiwan Semiconductor Manufacturing Co (TSMC) has notified Chinese chip design companies that it is suspending production of their most advanced AI chips from Monday, the Financial Times reported, citing three people familiar with the matter. TSMC, the world’s largest contract chipmaker, told Chinese customers it would no longer manufacture AI chips at advanced process nodes […]

Google Introduces Secure AI Framework, Shares Best Practices to Deploy AI Models Safely

Google introduced a new tool on Thursday to share its best practices for deploying artificial intelligence (AI) models. Last year, the Mountain View-based tech giant announced the Secure AI Framework (SAIF), a set of guidelines not only for the company but also for other enterprises building large language models (LLMs). Now, the tech giant has introduced the SAIF […]

Microsoft, OpenAI Are Spending Millions on News Outlets to Let Them Try Out AI Tools

Microsoft and OpenAI, in collaboration with the Lenfest Institute for Journalism, announced an AI Collaborative and Fellowship programme on Tuesday. With this programme, the two tech giants will spend upwards of $10 million (roughly Rs. 84.07 crore) in direct funding as well as enterprise credits to use proprietary software. The companies highlighted that the programme […]

Gemini AI Assistant Could Soon Let Users Make Calls, Send Messages From Lockscreen

The Gemini AI assistant, the recently added artificial intelligence (AI) virtual assistant for Android smartphones, is reportedly getting new capabilities. Ever since its release earlier this year, one of the major concerns has been its lack of integration with first-party and third-party apps. Over the months, the Mountain View-based tech giant has solved some of these issues with various […]

Zoom AI Companion 2.0 With New Capabilities, Custom AI Avatars for Zoom Clips Introduced

Zoom announced new artificial intelligence (AI) features for its platform at its annual Zoomtopia event on Wednesday. The video conferencing platform introduced AI Companion 2.0, the second generation of its AI assistant, which can now handle more tasks. Available across Zoom Workplace, the company’s AI-powered collaboration platform, it can now access Zoom Mail, Zoom […]

Adobe Content Authenticity Web App Introduced; Will Let Creators Add AI Label to Content

Adobe Content Authenticity, a free web app that allows users to easily add content credentials as well as artificial intelligence (AI) labels, was introduced on Tuesday. The platform is aimed at helping creators with their attribution needs. It works on images, videos, and audio files and is integrated with all of the Adobe Creative Cloud […]